Hierarchical visual tracking

A feature fusion framework for object tracking in video sequences is presented.

- The Bayesian tracking equations are extended to account for multiple object models.

- A particle filter algorithm is developed to tackle the multi-modal distributions emerging from cluttered scenes.

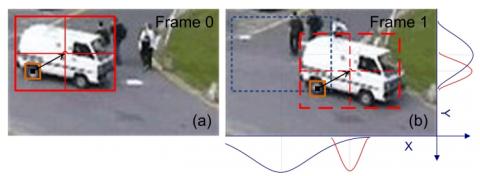

- Several auxiliary models are used to estimate the parameters of the main model, which fully describes the target. The models are updated hierarchically: the lower-dimensional models are updated first and guide the search in the parameter space of the subsequent models toward relevant regions, which reduces the computational complexity.

- A method for dynamic model adaptation, based on each sub-model's contribution to the tracking, is also developed.

- The likelihood for each object model is calculated using one or more visual cues, which increases the robustness of the proposed algorithm.

- We apply the proposed framework by fusing salient points, blobs, and edges as features, and experimentally verify its effectiveness in challenging conditions.
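
The hierarchical update described above can be illustrated with a toy sketch. It is not the authors' algorithm: it assumes a one-dimensional auxiliary model (target position only), a two-dimensional main model (position and scale), Gaussian likelihoods standing in for real visual cues, and a partitioned-sampling-style scheme in which the auxiliary model's resampling step concentrates particles before the main model is evaluated. The function names, noise levels, and the `hierarchical_step` interface are all hypothetical.

```python
import math
import random


def gaussian_likelihood(predicted, observed, sigma):
    """Unnormalised Gaussian likelihood of an observation given a prediction."""
    return math.exp(-0.5 * ((predicted - observed) / sigma) ** 2)


def normalise(weights):
    """Scale weights to sum to 1; fall back to uniform if all are zero."""
    total = sum(weights)
    if total == 0.0:
        return [1.0 / len(weights)] * len(weights)
    return [w / total for w in weights]


def hierarchical_step(particles, obs_position, obs_scale, rng):
    """One tracking step over particles given as (position, scale) tuples."""
    # Stage 1: auxiliary, position-only model (assumed cue: a blob centre).
    # Diffuse the position, weight by the position cue, and resample so that
    # the surviving particles sit in the relevant region of position space.
    moved = [(x + rng.gauss(0.0, 2.0), s) for x, s in particles]
    w_pos = normalise([gaussian_likelihood(x, obs_position, 3.0) for x, _ in moved])
    focused = rng.choices(moved, weights=w_pos, k=len(moved))

    # Stage 2: main model (position and scale). Only the scale dimension is
    # explored here, and only the cue not used in stage 1 (assumed: an edge
    # based scale measurement) contributes to the weights.
    proposed = [(x, s + rng.gauss(0.0, 0.1)) for x, s in focused]
    w_main = normalise([gaussian_likelihood(s, obs_scale, 0.2) for _, s in proposed])

    # Weighted-mean state estimate, then resample for the next frame.
    estimate = (
        sum(w * x for w, (x, _) in zip(w_main, proposed)),
        sum(w * s for w, (_, s) in zip(w_main, proposed)),
    )
    resampled = rng.choices(proposed, weights=w_main, k=len(proposed))
    return resampled, estimate


# Usage: track a static target at position 40 with scale 1.2 for ten frames.
rng = random.Random(0)
particles = [(rng.uniform(0.0, 100.0), rng.uniform(0.5, 2.0)) for _ in range(500)]
for _ in range(10):
    particles, estimate = hierarchical_step(particles, 40.0, 1.2, rng)
print(estimate)
```

Because the position cue is absorbed by the stage 1 resampling, stage 2 weights use only the remaining cue; this avoids counting the same evidence twice and relies on the assumption that the two cues are conditionally independent given the state.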
